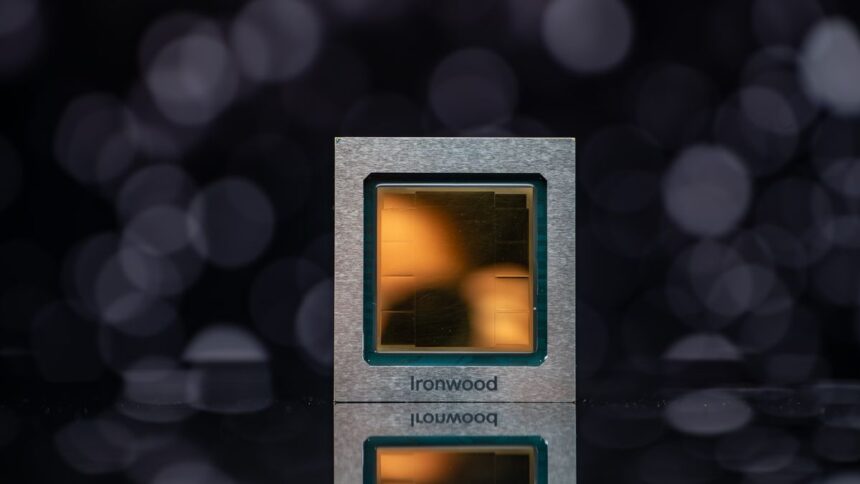

On Wednesday (April 9), during the Google Cloud Next 2025 event, Google introduced its next-generation tensor processing units (TPUs), codenamed Ironwood. This marks the company’s seventh generation of TPU technology—and the first one built specifically for AI inference workloads.

The launch of Ironwood signals a major shift in Google’s AI infrastructure strategy. While previous generations of TPUs were primarily optimized for training and deploying AI models, Ironwood was built from the ground up with a singular focus on inference—the stage where already-trained models are used in real-world scenarios to deliver outputs, insights, and intelligent responses.

According to Google, Ironwood is its most powerful, efficient, and scalable TPU to date, aligning with what the company is calling the start of the “inference era” of artificial intelligence.

Impressive specifications for increasing demands

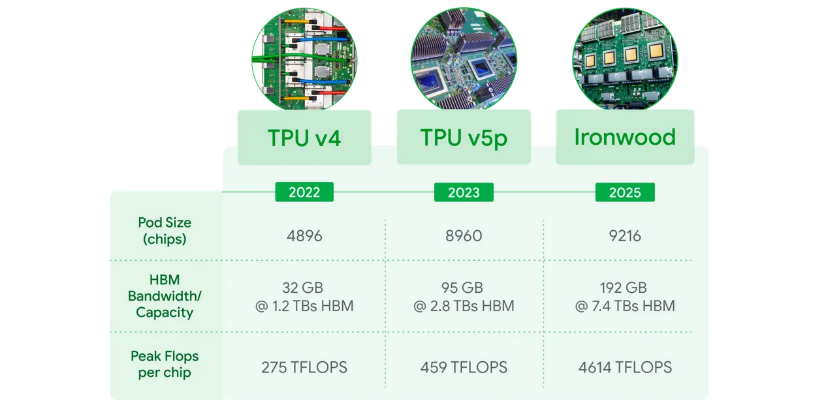

The newly unveiled Ironwood TPU represents a major leap forward in performance compared to its predecessor, Trillium. Each individual Ironwood chip delivers a staggering 4,614 teraflops of peak computational power and is equipped with six times more High Bandwidth Memory (HBM) than the previous generation.

Bandwidth has also seen dramatic improvements. Ironwood chips support up to 7.2 terabytes per second (TB/s) of memory bandwidth, 4.5 times that of Trillium. Communication between chips over the Inter-Chip Interconnect (ICI) now reaches 1.2 Tbps of bidirectional bandwidth, a 50% increase over the previous generation.
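The ratios quoted above also imply what the previous generation delivered. The following is a minimal sketch of that back-calculation, not an official specification: the helper name `implied_baseline` is hypothetical, "4.5 times" is assumed to mean a 4.5x multiple, and each result is in the same unit as the figure it is derived from.

```python
def implied_baseline(new_value: float, ratio: float) -> float:
    """Return the previous-generation value implied by a new value and its improvement ratio."""
    return new_value / ratio


# Figures as quoted in the article, in the units quoted there.
hbm_bandwidth_prev = implied_baseline(7.2, 4.5)  # assumes "4.5 times" means a 4.5x multiple
ici_bandwidth_prev = implied_baseline(1.2, 1.5)  # a 50% increase is a ratio of 1.5

print(f"Implied Trillium memory bandwidth: {hbm_bandwidth_prev:.2f}")
print(f"Implied Trillium interconnect bandwidth: {ici_bandwidth_prev:.2f}")
```

These are derived values only; they are as accurate as the quoted figures and ratios they come from.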

One of the standout features of Ironwood is its upgraded SparseCore, a dedicated accelerator optimized for ultra-efficient processing in complex workloads like classification and recommendation systems. This upgrade allows Ironwood to expand beyond conventional AI applications into areas like financial modeling and scientific computing.

For Google Cloud customers, Ironwood will be available in two deployment options:

- Pods with 256 chips

- Pods with 9,216 chips

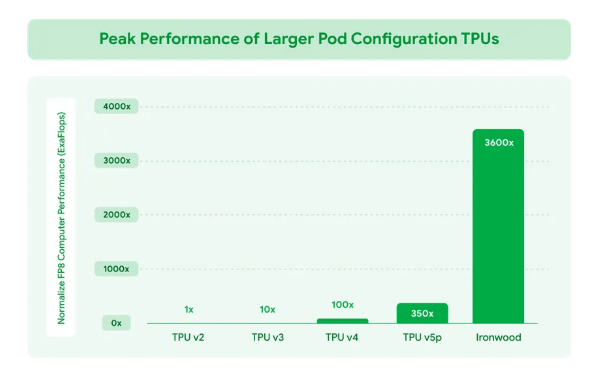

In its highest configuration, Ironwood delivers an astonishing 42.5 exaflops of compute power—more than 24 times the capacity of El Capitan, currently the world’s largest supercomputer, which boasts 1.7 exaflops.
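The pod-level figure follows from the per-chip number quoted earlier. Here is a rough sanity check, assuming peak throughput scales linearly with chip count (an idealization; sustained performance in practice will differ). The constant and function names are illustrative, and the El Capitan value is simply the one cited above.

```python
# Figures quoted in the article (peak values as stated by Google).
TFLOPS_PER_CHIP = 4_614         # peak teraflops per Ironwood chip
POD_SIZES = (256, 9_216)        # chips per pod configuration
EL_CAPITAN_EXAFLOPS = 1.7       # figure cited in the article

TFLOPS_PER_EXAFLOP = 1_000_000  # 1 exaflop = 10^6 teraflops


def pod_exaflops(chips: int, tflops_per_chip: float = TFLOPS_PER_CHIP) -> float:
    """Aggregate peak compute of a pod, assuming perfect linear scaling."""
    return chips * tflops_per_chip / TFLOPS_PER_EXAFLOP


for chips in POD_SIZES:
    exaflops = pod_exaflops(chips)
    ratio = exaflops / EL_CAPITAN_EXAFLOPS
    print(f"{chips:>5} chips: {exaflops:.2f} exaflops ({ratio:.1f}x the cited El Capitan figure)")
```

For the 9,216-chip pod this works out to roughly 42.5 exaflops, about 25 times the 1.7 exaflops cited for El Capitan, which is consistent with the "more than 24 times" claim.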

Strategic focus on AI inference

Google’s decision to design a TPU specifically for inference wasn’t made lightly. During the announcement, the company emphasized that we’re now entering what it calls the “Age of Inference”—a new phase in AI where models don’t just respond with real-time data, but actively generate insights and interpretations on their own.

“It’s a move from responsive AI models that provide real-time information for people to interpret, to models that provide the proactive generation of insights and interpretation,” explained Amin Vahdat, VP and GM of ML, Systems, and Cloud AI at Google, during the announcement.

This shift in how AI is used demands a new kind of infrastructure—one built for “thinking models” like large language models (LLMs), Mixture of Experts (MoEs), and other advanced reasoning systems. These workloads require massive parallel processing, ultra-fast memory access, and extreme efficiency—exactly what Ironwood was designed to deliver.

Why focus on inference now?

Google’s growing focus on inference signals a pivotal moment in the evolution of artificial intelligence. As models become more complex and context windows continue to expand, the computational burden is shifting away from training and landing squarely on inference—the phase where models are actually put to use.

Modern AI applications now involve multi-step reasoning and processing of longer, more dynamic inputs. As a result, the efficiency of inference—how quickly and economically a model can generate useful output—has become more critical than how fast it can be trained.

While much of the industry remains laser-focused on accelerating training for ever-larger models, Google appears to be one step ahead, anticipating the real challenge: scaling inference efficiently. With Ironwood, Google is tackling this bottleneck head-on, delivering a solution purpose-built to meet the demands of next-gen AI workloads.

Impact on Google Cloud and ecosystem services

Ironwood isn’t just a powerful new chip—it’s a cornerstone of Google Cloud’s broader AI strategy. Fully integrated into Google’s AI Hypercomputer architecture, Ironwood enables the delivery of smarter, faster, and more energy-efficient services across the company’s entire cloud ecosystem.

One of Ironwood’s standout advantages is its exceptional energy efficiency. Google claims it delivers twice the performance per watt compared to its predecessor, Trillium. In an era where energy constraints are one of the biggest barriers to scaling AI infrastructure, Ironwood’s ability to offer significantly more compute per watt is a major leap forward.

This is made possible through an advanced liquid cooling system and a chip design that allows for up to twice the sustained performance of traditional air-cooled systems—even under intense and continuous workloads. Google notes that Ironwood is nearly 30 times more energy-efficient than the first cloud TPU it released back in 2018.

For developers and engineers, Ironwood offers not only raw computational power but also a more streamlined and flexible software ecosystem. With the debut of the Pathways runtime on Google Cloud, users can benefit from features like disaggregated inference, which separates the “prefill” and “decode” stages into independently scalable workloads to maximize performance and throughput.
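To make the prefill/decode split concrete, here is a toy sketch of the general idea. It does not use the Pathways API or any real model: `PrefillResult`, `prefill`, `decode`, and `serve` are hypothetical names, the "model" just fabricates placeholder tokens, and thread pools stand in for separately provisioned accelerator groups.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class PrefillResult:
    """Hypothetical stand-in for the state prefill hands to decode (e.g. a KV cache)."""
    request_id: int
    context: list[str]


def prefill(request_id: int, prompt: str) -> PrefillResult:
    # Prefill: process the whole prompt in a single pass.
    return PrefillResult(request_id, prompt.split())


def decode(state: PrefillResult, max_new_tokens: int) -> list[str]:
    # Decode: emit tokens one at a time; each step depends on all prior context.
    context = list(state.context)
    generated = []
    for _ in range(max_new_tokens):
        token = f"tok{len(context)}"  # toy "model": token derived from context length
        context.append(token)
        generated.append(token)
    return generated


def serve(prompts, prefill_workers=2, decode_workers=4, max_new_tokens=3):
    # The two pools are sized independently, which is the point of disaggregation.
    with ThreadPoolExecutor(prefill_workers) as prefill_pool, \
         ThreadPoolExecutor(decode_workers) as decode_pool:
        prefilled = [prefill_pool.submit(prefill, i, p) for i, p in enumerate(prompts)]
        # Hand each prefill result to the decode pool as soon as it is ready.
        decoded = [decode_pool.submit(decode, f.result(), max_new_tokens) for f in prefilled]
        return [f.result() for f in decoded]


if __name__ == "__main__":
    print(serve(["hello world", "a b c"]))
```

Because the stages have different cost profiles (one large pass over the prompt versus many small sequential steps), giving each its own pool lets an operator scale whichever stage is the bottleneck without over-provisioning the other.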

Some of Google’s most advanced models—such as Gemini 2.5 and the Nobel Prize–winning AlphaFold—are already running on TPUs. With Ironwood, Google aims to fuel the next wave of breakthroughs in AI, whether developed in-house or by its growing base of cloud customers.

Availability expected in 2025

While Google hasn’t announced an exact launch date, the company confirmed that Ironwood will become available to Google Cloud customers sometime in 2025. The new TPU will be seamlessly integrated into the AI Hypercomputer architecture, designed to handle the most intensive and complex AI workloads.

Importantly, developers won’t need to overhaul their existing tools to take advantage of Ironwood. It will support widely used frameworks like PyTorch and JAX, making it easy for teams to transition or scale up their projects. Additionally, users will be able to leverage the Pathways runtime, a system developed by Google DeepMind that distributes work across multiple TPUs for greater efficiency and performance.