The race to build the best AI hardware is also putting a spotlight on energy efficiency. AMD recently announced that it has essentially reached, ahead of schedule, its goal of dramatically improving the energy efficiency of its AI-focused server hardware.

In 2021, AMD, under CEO Lisa Su, set an ambitious goal: a 30x improvement by 2025 in the energy efficiency of servers equipped with EPYC CPUs and Instinct accelerator GPUs, measured against a 2020 baseline. The plan was expected to take five years, but AMD has essentially met it in just four, a notable result for energy-efficient computing.
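To put the target in perspective, here is a rough worked calculation. It assumes that "efficiency" means useful work per unit of energy and that the improvement compounds evenly over the five years from 2020 to 2025; AMD's goal does not say how progress is spread across years. The symbols are illustrative: $r$ is the assumed annual improvement factor and $E$ is the energy needed for a fixed workload. Under those assumptions, a 30x gain means roughly doubling efficiency every year, and the same workload would need only about 3.3% of its 2020 energy:

$$
r = 30^{1/5} \approx 1.97 \ \text{per year}, \qquad \frac{E_{2025}}{E_{2020}} = \frac{1}{30} \approx 0.033
$$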

The result shows AMD pushing performance forward while addressing the energy demands of the rapidly expanding AI industry.

Focus on increasingly efficient AI chips.

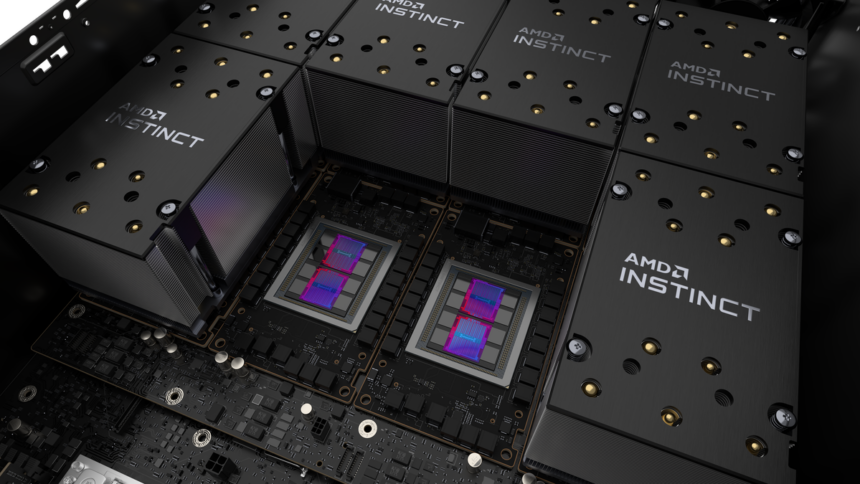

AMD’s internal testing of its latest components for AI servers, a segment in which the company has been steadily gaining ground, shows large efficiency gains. A server with two 64-core EPYC 9575F processors, eight Instinct MI300X GPUs, and 2.3 TB of DDR5 memory delivered 28.3 times the energy efficiency of a comparable 2020 system.

AMD did not disclose the exact specifications of the 2020 system, but it likely used EPYC 7002 CPUs based on the Zen 2 architecture and Instinct MI100 GPUs based on the first-generation CDNA architecture. AMD measured the nearly 30x improvement by running inference on Meta’s Llama 3.1 70B large language model.
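Applying the same kind of rough arithmetic to the measured figure makes it more concrete. This sketch again assumes that efficiency means work per unit of energy, and it treats the 28.3x result as covering four years (2020 to 2024); AMD has not published a year-by-year breakdown. Here $E$ is the energy for a fixed inference workload and $r$ is the implied average annual improvement factor, both illustrative symbols:

$$
\frac{E_{\text{new}}}{E_{2020}} = \frac{1}{28.3} \approx 0.035 \ (\text{about } 96.5\% \text{ less energy}), \qquad r = 28.3^{1/4} \approx 2.31 \ \text{per year}
$$

In other words, under these assumptions the same Llama-scale inference job would need roughly one twenty-eighth of the energy it consumed on the 2020 hardware.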

AMD attributes these gains to a combination of hardware architecture improvements and software optimizations.

The result matters for the AI chip industry, where power efficiency becomes more critical as servers scale up to run large-scale inference for advanced models like those deployed by Meta, Microsoft, Google, and other tech giants. Because these workloads demand massive computational power, efficiency gains of this size make AMD’s result a significant milestone in energy-efficient AI hardware.