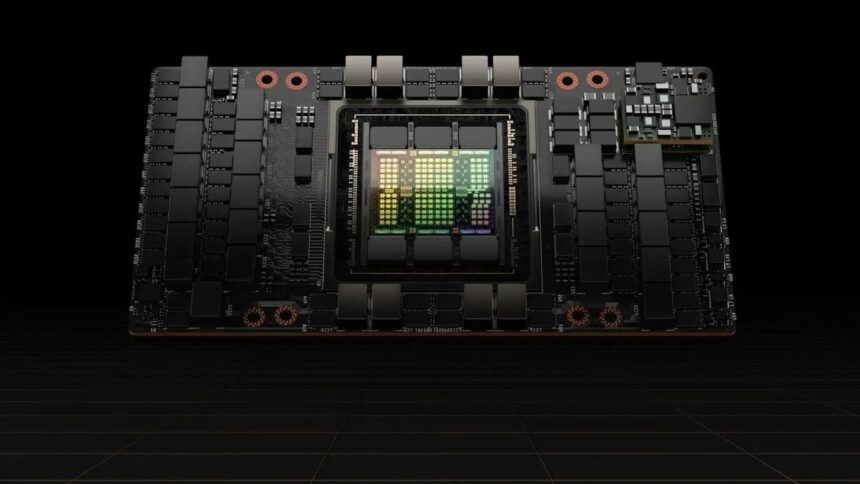

Mark Zuckerberg, the CEO and founder of Meta, has disclosed that the company is training Llama 4, the latest version of its artificial intelligence model, on a massive cluster of NVIDIA H100 GPUs. The setup comprises over 100,000 GPUs and is reportedly running at full capacity.

Zuckerberg stated that Llama 4 is set to introduce “new modalities,” possess “strong reasoning,” and operate “much faster” than the large language models (LLMs) currently in use. His strategy aims to ensure that Meta remains competitive with other tech giants like Microsoft, Google, and Elon Musk’s xAI, all of which are also in the race to develop next-generation LLMs.

Meta isn’t the only company deploying such a vast GPU array. In July, Elon Musk revealed that he was using a similar setup to train Grok, xAI’s AI model, with plans to double its capacity to 200,000 GPUs.

However, Zuckerberg appears to have even grander ambitions, projecting that Meta could scale up to 500,000 GPUs by the end of 2024. This escalating competition among technology leaders is likely to intensify as they push the boundaries of artificial intelligence development.

Differences between Llama 4 and the others

Meta’s approach to Llama 4 sets it apart from other large language models (LLMs) currently available, as it will be offered free of charge to all users. This means that researchers, companies, and even government agencies can access and utilize the technology without incurring any costs.

OpenAI’s GPT-4o and Google’s Gemini, by contrast, require users to interact with the AI through a paid API. Llama 4’s free availability could therefore significantly boost its popularity in the market.

However, Meta does impose certain restrictions on the use of Llama 4. For instance, the license limits commercial use of the model, and the company has not disclosed details about the training processes behind the AI. This combination of free access and restrictions could shape how Llama 4 is adopted across various sectors.

Concerns about energy consumption

Zuckerberg’s announcement raises significant concerns about the energy consumption associated with the operation of 100,000 NVIDIA H100 GPUs. If each GPU has an annual energy requirement of 3.7 MWh, this implies that Meta’s GPU cluster could potentially consume around 370 GWh of electricity each year.
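The arithmetic behind that estimate can be checked with a minimal Python sketch. The 3.7 MWh per GPU per year and the 100,000 GPU count come from the text above; the function names are illustrative, and the sketch assumes the per-GPU figure applies uniformly to every GPU. Whether that figure includes cooling or other data-center overhead is not stated here.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours, ignoring leap years


def cluster_energy_gwh(gpu_count: int, mwh_per_gpu_per_year: float = 3.7) -> float:
    """Annual cluster energy in GWh, given a per-GPU annual figure in MWh."""
    return gpu_count * mwh_per_gpu_per_year / 1000  # 1 GWh = 1,000 MWh


def average_power_mw(energy_gwh_per_year: float) -> float:
    """Average continuous draw in MW implied by an annual energy total in GWh."""
    return energy_gwh_per_year * 1000 / HOURS_PER_YEAR  # GWh -> MWh, spread over a year


if __name__ == "__main__":
    energy = cluster_energy_gwh(100_000)
    print(f"{energy:.0f} GWh per year")                        # 370 GWh per year
    print(f"{average_power_mw(energy):.1f} MW average draw")   # 42.2 MW average draw
```

Under the same per-GPU assumption, `cluster_energy_gwh(500_000)` gives 1,850 GWh per year for the 500,000-GPU scale mentioned earlier. Treat this as a rough extrapolation rather than a forecast.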

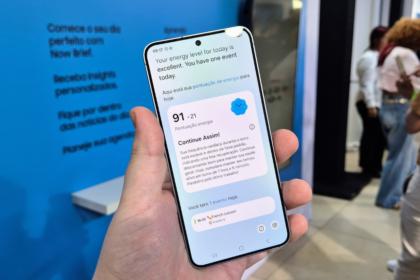

To put this into perspective, 370 GWh is roughly the annual electricity use of 34,000 average American homes, assuming about 10.8 MWh per household per year. It is still only a small fraction of what a metropolis the size of São Paulo consumes.

Zuckerberg has acknowledged that energy limitations could hinder the expansion of artificial intelligence, yet this awareness hasn’t deterred major tech companies from investing in increasingly large data centers for AI training. Companies like Microsoft, Google, Oracle, and Amazon are all exploring nuclear power as a solution, though they are still in the initial phases of developing modular reactors and retrofitting power plants to ensure they have a reliable energy supply for their AI needs in the future.

NVIDIA’s next generation of GPUs

In addition to the initiatives from Meta and other tech giants, NVIDIA is also making significant strides in the AI landscape with the upcoming launch of its “Blackwell” B200 GPUs, set to arrive in early 2025. These new GPUs are anticipated to deliver even greater performance, further energizing the AI market.

Reports suggest that the entire initial production run of the Blackwell GPUs has already sold out, leading NVIDIA to pause new orders until the second batch is manufactured. While these GPUs are expected to offer enhanced capabilities, there is a caveat: their energy consumption is anticipated to exceed that of the current H100 models, raising additional concerns about the sustainability of AI infrastructure.
