Microsoft has released a preview of a new correction feature for its Azure AI platform, aimed at developers building generative AI applications. The feature targets AI "hallucinations," in which a model produces inaccurate or fabricated information in its responses.

Azure could already detect and block unreliable responses. The new correction feature goes a step further: instead of only blocking an incorrect answer, it lets developers have that answer revised and replaced with an accurate one.

Katelyn Rothney, a product marketing manager for Azure AI Content Safety, stressed the importance of this capability: "Empowering consumers to understand and take action on unsubstantiated content and hallucinations is crucial, especially as the demand for reliability and accuracy in AI-generated materials continues to increase."

This update is part of Microsoft’s ongoing efforts to improve the trustworthiness and accuracy of AI outputs as demand for generative AI tools grows.

How the feature works

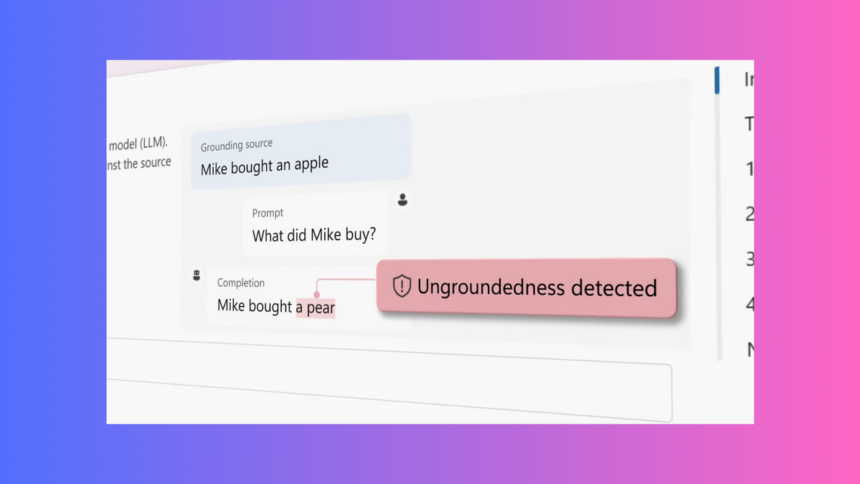

To use the correction feature, developers first enable it in the Azure AI Content Safety service. Once it is enabled, the service detects likely hallucinations in a response and highlights the problematic passages for the developer.

The tool explains why each flagged passage is considered incorrect, and developers can also have the system rewrite the inaccurate material automatically so that the revised response is consistent with the model's data sources. The aim is that end users receive the corrected, reliable answer instead of the erroneous one.
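To make the detect, explain and rewrite steps more concrete, here is a minimal Python sketch of a groundedness check. It is not Azure's API and not Microsoft's method: it assumes a crude word-overlap heuristic, and the names `check_groundedness`, `correct`, `SUPPORT_THRESHOLD` and the `rewrite` callback are hypothetical. In a real system the rewrite step would call a language model to produce a grounded replacement; here a stub stands in for it.

```python
import re

# Hypothetical cutoff: a sentence counts as grounded when at least this
# fraction of its content words also appear in the grounding sources.
SUPPORT_THRESHOLD = 0.6

# Small illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "a", "an", "is", "are", "was", "were", "of", "in", "on", "and", "to", "it", "its"}


def content_words(text: str) -> set[str]:
    """Lowercased word tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def check_groundedness(answer: str, sources: list[str]) -> list[dict]:
    """Detect step: score each answer sentence against the source vocabulary."""
    source_vocab = set().union(*(content_words(s) for s in sources))
    report = []
    for sentence in split_sentences(answer):
        words = content_words(sentence)
        missing = words - source_vocab
        support = 1 - len(missing) / len(words) if words else 1.0
        report.append({
            "sentence": sentence,
            "grounded": support >= SUPPORT_THRESHOLD,
            # Explain step: list the terms the sources do not back up.
            "reason": f"unsupported terms: {sorted(missing)}" if missing else "",
        })
    return report


def correct(answer: str, sources: list[str], rewrite) -> str:
    """Rewrite step: pass each flagged sentence to a caller-supplied function.

    In a real system `rewrite` would ask a model for a grounded version;
    returning an empty string drops the sentence.
    """
    fixed = []
    for item in check_groundedness(answer, sources):
        text = item["sentence"] if item["grounded"] else rewrite(item["sentence"], sources)
        if text:
            fixed.append(text)
    return " ".join(fixed)


sources = ["The clinic opens at 9 am and closes at 5 pm on weekdays."]
answer = "The clinic opens at 9 am on weekdays. It also offers free parking for visitors."

for item in check_groundedness(answer, sources):
    print(item["grounded"], "|", item["sentence"], "|", item["reason"])

# Stub rewrite that simply drops unsupported sentences.
print(correct(answer, sources, lambda sentence, srcs: ""))
```

Word overlap is only a stand-in: it misses claims that reuse source vocabulary but contradict it, which is why production systems use a model to judge groundedness rather than a lexical rule.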

Microsoft says the feature can improve the user experience by returning corrected responses instead of simply blocking questionable ones. It also argues that the feature makes it more practical to build reliable AI applications for high-stakes fields such as healthcare, where accurate information is critical.

Hallucinations are a well-known problem for generative AI systems such as ChatGPT and Gemini. Eliminating them entirely remains difficult, but researchers at the University of Oxford have developed a probability-based method for detecting when a model is likely to be hallucinating, a promising route to more reliable AI.
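The general idea behind the Oxford researchers' approach, usually described as semantic entropy, is to ask a model the same question several times and measure how much the meanings of its answers disagree: consistent answers suggest the model is on firm ground, while scattered ones suggest it may be making things up. The Python sketch below is a simplified illustration under our own assumptions, not the researchers' code. The sampled answers are passed in directly, `meaning_key` and `semantic_entropy` are hypothetical names, and answers are grouped by normalised string equality rather than by the entailment-based clustering the research uses.

```python
import math
from collections import Counter


def meaning_key(answer: str) -> str:
    """Crude stand-in for meaning clustering: ignore case, spacing and end punctuation.

    The published approach groups answers by whether they entail each other;
    exact matching after normalisation is a deliberate simplification here.
    """
    return " ".join(answer.lower().strip(" .!?").split())


def semantic_entropy(samples: list[str]) -> float:
    """Entropy (in nats) of the sampled answers' distribution over meaning clusters."""
    n = len(samples)
    clusters = Counter(meaning_key(s) for s in samples)
    # Sum of p * log(1/p) over clusters; every term is non-negative.
    return sum((c / n) * math.log(n / c) for c in clusters.values())


consistent = ["Paris", "paris.", "Paris"]
scattered = ["Paris", "Lyon", "Marseille"]
print(semantic_entropy(consistent))  # 0.0
print(semantic_entropy(scattered))   # about 1.0986, i.e. ln 3
```

A high score does not prove an answer is wrong; it only signals that the model's answers are unstable, which makes the response a candidate for review or correction.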