Microsoft has introduced an update to its small language model (SLM), Phi Silica, that gives it the ability to “see.” The upgrade lets the model understand images, which should enhance features such as Recall.

With this improvement, Phi Silica has become multimodal: it can now process and interpret images as well as text. This opens up new possibilities for productivity and accessibility features.

Microsoft shared these details in an official blog post, explaining how the new functionality works and what benefits it brings, particularly in making it easier for users with disabilities to interact with their PCs.

What is Phi Silica, and how does it work?

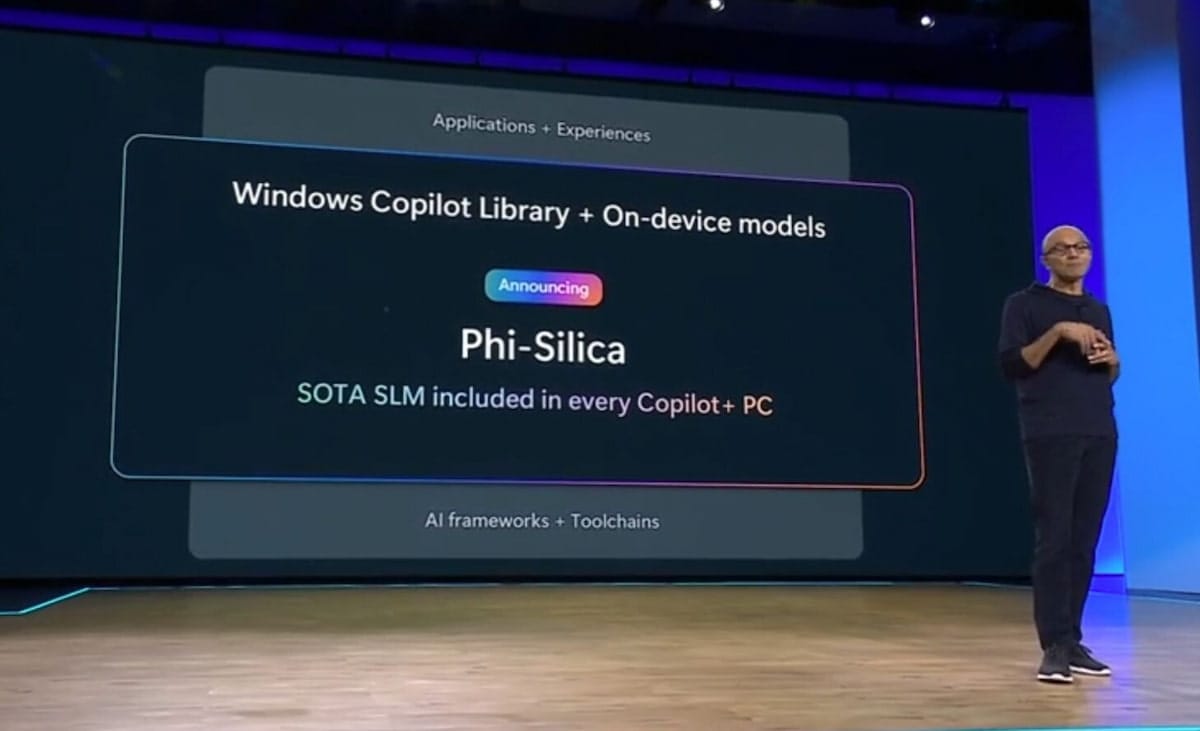

Phi Silica is a small language model (SLM) developed by Microsoft as a lighter alternative to larger AI models. Its primary purpose is to integrate seamlessly into Windows, specifically to power AI features on Copilot+ PCs.

Think of Phi Silica as the local AI engine behind various Windows features; it is part of the Windows Copilot Runtime. It enables tasks like generating text summaries directly on your device, so the processing happens locally rather than in the cloud. This not only improves speed but also reduces energy consumption.

One of Phi Silica’s notable roles is in Windows Recall. This feature captures snapshots of what’s on your screen and acts as a kind of memory bank for your activity. You can later search through past activity using natural language, making it easier to find something you saw or did earlier.

Microsoft has made Phi Silica multimodal, and this offers many benefits

Microsoft’s achievement with Phi Silica is especially impressive in terms of efficiency. Rather than creating new components from scratch, Microsoft reused existing ones and added a small “projector” model to introduce vision capabilities.

This approach keeps resource consumption low. Phi Silica’s new multimodal abilities will also unlock many new AI-driven experiences, such as describing images.

Currently, these features are available only in English, but Microsoft plans to expand to other languages, broadening the model’s potential use cases.

For now, the multimodal capabilities of Phi Silica will be accessible exclusively on Copilot+ PCs with Snapdragon chips. However, Microsoft intends to eventually make this available on devices powered by AMD and Intel chips as well.

How did Microsoft make Phi Silica “see” images?

As mentioned earlier, the approach Microsoft took with Phi Silica is both innovative and efficient.

Originally, Phi Silica could only understand text: words, letters, and other written content. However, instead of building an entirely new “brain” for image understanding, Microsoft took a more creative and efficient approach.

To bring image understanding to Phi Silica, Microsoft used an image encoder, a model specialized in analyzing photos and images and trained to recognize their key elements. The company then created a translator, the small projector model, that converts the information extracted from an image into a format Phi Silica can process.

In effect, Microsoft taught Phi Silica to “speak” the language of images, allowing the model to connect what it sees to its existing vocabulary and knowledge.
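To make the encoder-plus-projector idea more concrete, here is a minimal Python sketch of what a projector does in multimodal models in general: a learned mapping that turns image features into vectors the language model can treat like word embeddings. This is not Microsoft’s implementation. The article does not describe the architecture of Phi Silica’s projector, so the single linear layer, the toy dimensions, the weights, and the names `project` and `build_multimodal_input` are all hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows = output dims, columns = input dims


def project(features: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Hypothetical toy projector: y = W x + b, mapping one image feature
    vector into the language model's embedding space."""
    if any(len(row) != len(features) for row in weights):
        raise ValueError("weight columns must match the feature size")
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, bias)]


def build_multimodal_input(image_patches: List[Vector],
                           text_embeddings: List[Vector],
                           weights: Matrix, bias: Vector) -> List[Vector]:
    """Project every image patch, then place the resulting 'image tokens'
    ahead of the text tokens so the language model reads one sequence."""
    image_tokens = [project(p, weights, bias) for p in image_patches]
    return image_tokens + text_embeddings


# Toy sizes (assumed): the image encoder emits 4-dim patch features,
# and the language model uses 3-dim token embeddings.
W = [[0.5, 0.0, 0.0, 0.5],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, -1.0]]
b = [0.0, 0.1, 0.0]

patches = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0]]
text = [[0.2, 0.2, 0.2]]  # stand-in embedding for a prompt token

sequence = build_multimodal_input(patches, text, W, b)
print(len(sequence), len(sequence[0]))  # prints "3 3"
```

In real models the projector’s weights are learned during training (often as a small multi-layer network rather than one linear layer), and the dimensions run into the thousands. The point of the sketch is only that the projector’s output has the same size as a text embedding, so image and text tokens can share one input sequence without retraining the language model from scratch.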

What helps Phi Silica be multimodal?

Phi Silica is a small language model (SLM) developed by Microsoft. Essentially, it’s an AI designed to understand and generate natural language, much like its larger counterparts, large language models (LLMs). However, Phi Silica has far fewer parameters, which makes it more efficient while still offering powerful capabilities.

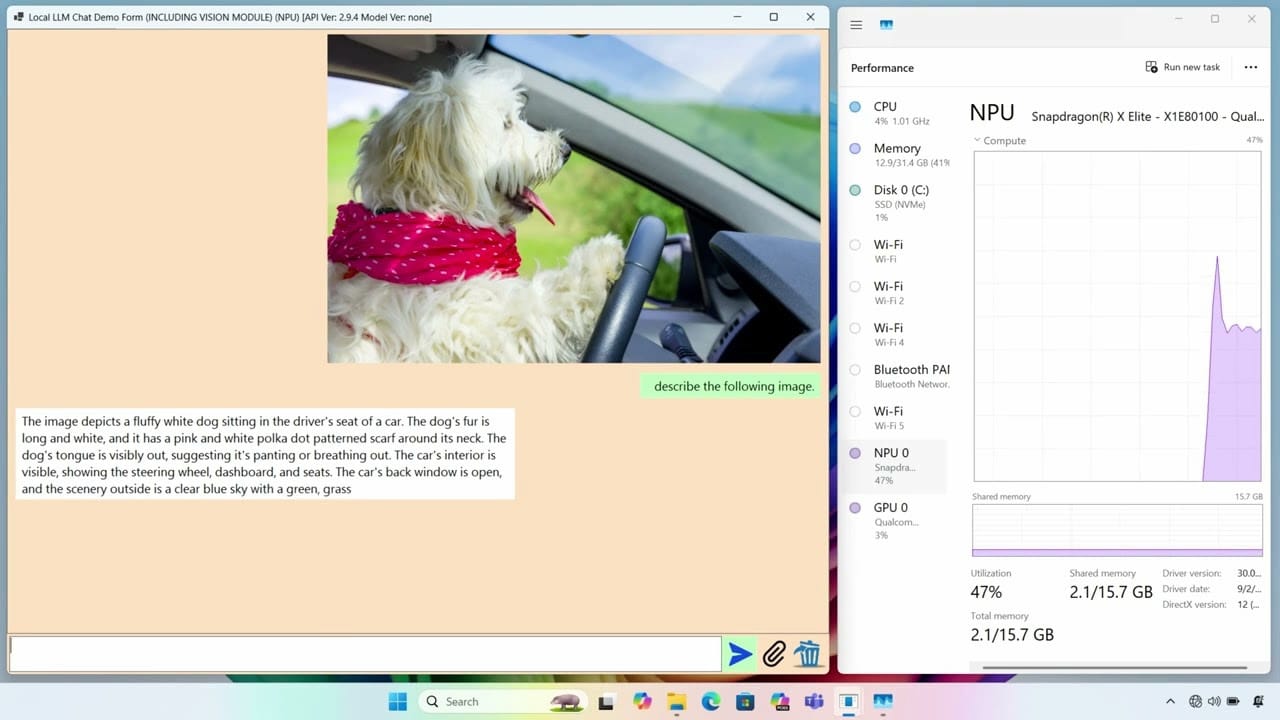

Previously, Phi Silica powered functions like Windows Recall and other intelligent features. Now, it’s been upgraded to become multimodal, which means it can not only understand text but also interpret images.

Microsoft has shared several examples of the new possibilities opened up by this multimodal upgrade, especially in terms of accessibility for users.

For instance, Phi Silica can now assist people with visual impairments. If a user encounters an image on a website or in a document, Phi Silica can generate a detailed text description of it, which the PC’s assistive tools, such as a screen reader, can then read aloud. This makes web content more accessible.

The improvement also extends to users with learning difficulties: Phi Silica can analyze what’s displayed on the screen and provide contextual explanations or detailed help for content they may find hard to understand.

Phi Silica can also help identify objects, read labels, or interpret text in elements displayed on screen. Together, these capabilities could significantly improve the experience for many users.